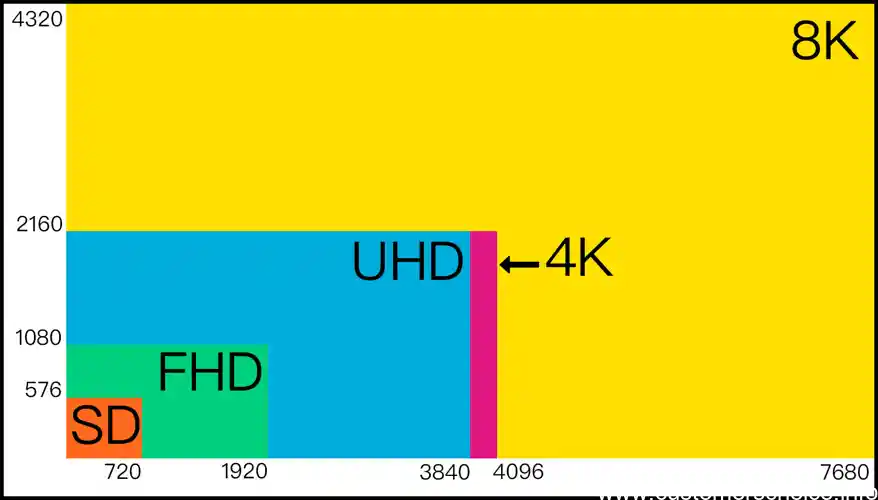

Thinking about upgrading your TV and wondering whether to choose Ultra HD or 4K? Let’s start with the basics. Ultra HD (UHD) refers to a resolution of 3840 x 2160 pixels (also called 2160p), while 4K in its strict sense refers to the slightly wider cinema resolution of 4096 x 2160 pixels, used primarily in the movie industry. The terms are often used interchangeably, but they are not identical. Ultra HD is the resolution found in consumer TVs, where it is often paired with features like HDR. This guide breaks down the technical differences between Ultra HD and 4K so that you can make an informed choice between them.

What is Ultra HD and 4K?

Ultra HD and 4K are both used to describe high-resolution display technology, yet they aren’t identical. Ultra HD (UHD) is a consumer electronics term for a resolution of 3,840 x 2,160 pixels, which has four times the pixel count of a standard 1080p display. Strictly speaking, 4K is the cinema industry’s 4,096 x 2,160 format (DCI 4K), but manufacturers and retailers routinely use 4K as a marketing label for Ultra HD displays as well.

Broadcast standards sometimes label the 3,840 x 2,160 format UHD-1 to distinguish it from higher-resolution tiers, but for TV buyers, Ultra HD and 4K currently differ mainly in labeling rather than technical specifications. In the following sections, we’ll explore these differences to determine if Ultra HD offers advantages over 4K.

Definitions of Ultra HD and 4K

Ultra HD (UHD) and 4K are often used to describe high-resolution displays, but they have distinct definitions. On consumer products, 4K refers to 3,840 x 2,160 pixels, which is four times the pixel count of Full HD (1080p); in cinema, it refers to 4,096 x 2,160 pixels. Ultra HD refers to 3,840 x 2,160, though the term also covers 8K Ultra HD at 7,680 x 4,320.

The terms become more confusing because Ultra HD branding is often bundled with features that have nothing to do with resolution, such as high dynamic range (HDR), and not every 4K display supports them. Before choosing a display, check that it meets the specific standards you need for resolution and picture quality. In essence, both terms describe high-resolution displays, but a set marketed as Ultra HD may also advertise additional image enhancements.

Difference between Ultra HD and 4K

While 4K and Ultra HD are similar, they are not identical. 4K is strictly a statement about pixel count: 3,840 x 2,160 pixels (four times Full HD) on consumer displays, or 4,096 x 2,160 in cinema. Ultra HD displays use the 3,840 x 2,160 resolution and are often sold with added technologies like HDR and a wider color gamut for richer image quality.

In summary, 4K describes resolution alone, while a display marketed as Ultra HD may also include enhancements that improve the viewing experience. When deciding which is better, consider compatibility, content availability, and budget. The right choice depends on the features and quality suited to your needs.

Technical Differences of Ultra HD vs. 4K

Ultra HD (UHD) and 4K are usually compared on resolution, pixel density, and color reproduction. UHD refers to 3,840 x 2,160 pixels, while 4K in the strict cinema sense refers to 4,096 x 2,160 pixels. The extra 256 columns add about 6.7% more pixels and a slightly wider aspect ratio (roughly 1.9:1 versus 16:9), a difference that is hard to see in sharpness and mainly affects how cinema content is framed on a consumer screen.
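One practical consequence of the two widths is letterboxing when a cinema 4K frame is shown on a UHD screen. The minimal Python sketch below assumes the source fills the whole 4,096 x 2,160 container and is scaled down to fit the display width; the function name `fit_to_width` is illustrative, not part of any standard or library.

```python
def fit_to_width(src_w, src_h, dst_w, dst_h):
    """Scale a source frame to the display width, keeping its aspect ratio.

    Returns the scale factor, the scaled picture height, and the height
    of each black bar above and below the picture (all in display pixels).
    """
    scale = dst_w / src_w
    scaled_h = src_h * scale
    bar = (dst_h - scaled_h) / 2
    return scale, scaled_h, bar


# Cinema 4K frame (assumed to fill 4096 x 2160) shown on a UHD panel.
scale, scaled_h, bar = fit_to_width(4096, 2160, 3840, 2160)
print(f"scale factor: {scale}")
print(f"picture height on screen: {scaled_h} px")
print(f"black bar top and bottom: {bar} px each")
```

Under these assumptions the picture is scaled to 93.75% of its original size and occupies 2,025 of the 2,160 rows, leaving thin bars of 67.5 pixels above and below. Real films often use a narrower picture inside the cinema container, so actual bars are usually larger.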

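Pixel density depends on resolution and screen size, so a UHD panel and a cinema 4K panel of the same size are nearly identical in sharpness. Where Ultra HD sets tend to stand out is in HDR support, including Ultra HD Premium certification, which brings richer colors and contrast and improves overall image depth. These features make a well-equipped Ultra HD display an attractive option for those seeking enhanced visual detail and color accuracy.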

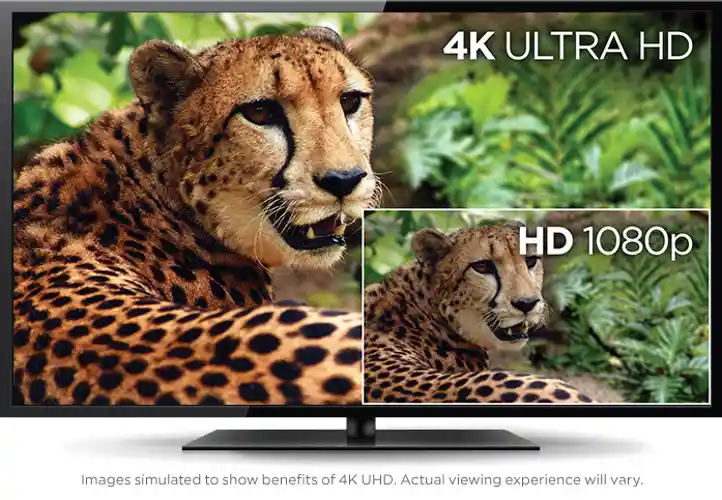

Resolution Differences

The primary difference between Ultra HD and 4K is resolution. In its strict sense, 4K describes 4,096 x 2,160 pixels, used primarily in cinema production and projection. Ultra HD is 3,840 x 2,160 pixels: the same height but 256 pixels narrower, which matches the 16:9 shape of consumer TVs and monitors.
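The pixel arithmetic behind these figures is easy to check. The short Python sketch below compares Full HD, Ultra HD, and cinema 4K; the dictionary and function names are illustrative only.

```python
# Resolutions as (width, height) in pixels.
RESOLUTIONS = {
    "Full HD (1080p)": (1920, 1080),
    "Ultra HD (2160p)": (3840, 2160),
    "Cinema 4K (DCI)": (4096, 2160),
}


def pixel_count(width, height):
    """Total number of pixels in a frame."""
    return width * height


full_hd = pixel_count(*RESOLUTIONS["Full HD (1080p)"])

for name, (w, h) in RESOLUTIONS.items():
    total = pixel_count(w, h)
    print(f"{name}: {w} x {h} = {total:,} pixels, "
          f"{total / full_hd:.2f}x Full HD, aspect ratio {w / h:.2f}:1")
```

Ultra HD comes out at 8,294,400 pixels, exactly four times Full HD, while cinema 4K has 8,847,360 pixels, about 4.27 times Full HD.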

In practical terms, most consumer displays labeled as Ultra HD or 4K use the 3,840 x 2,160 standard, so the two labels describe the same panel resolution. What varies between models is the feature set, such as HDR, which can enhance color vibrancy. Ultimately, the right choice depends on your needs: a 4K display without HDR can be enough for gaming and fast-paced visuals, while a display with HDR and a wide color gamut may better serve video editing and color-critical work.

Pixel Density Differences

Ultra HD and 4K both offer much higher pixel density than Full HD at the same screen size. Pixel density is determined by resolution and screen size alone; HDR and a broader color gamut change color and contrast, not sharpness. At a given size, a UHD panel packs twice as many pixels per inch as a 1080p panel, which can provide a noticeable improvement in clarity, especially on large screens viewed up close.
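Pixel density is commonly expressed in pixels per inch (PPI): the length of the screen diagonal in pixels divided by its length in inches. A minimal Python sketch, with screen sizes chosen purely as examples and an illustrative function name:

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density: diagonal length in pixels divided by diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


# Example screen sizes (diagonal, in inches); these are arbitrary choices.
for size in (27, 55, 65):
    uhd = pixels_per_inch(3840, 2160, size)
    fhd = pixels_per_inch(1920, 1080, size)
    print(f'{size}" screen: Ultra HD {uhd:.1f} PPI, Full HD {fhd:.1f} PPI')
```

On a 55-inch screen, for example, Ultra HD works out to about 80 PPI against about 40 PPI for Full HD. Nothing in the formula involves HDR or color gamut, so two panels with the same resolution and size have the same pixel density.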

Device compatibility is crucial here: most modern GPUs can drive a 3,840 x 2,160 display, but enabling HDR may demand more processing power and connection bandwidth. Content availability is another factor; streaming platforms such as Netflix and Amazon Prime use the 4K and Ultra HD labels for the same 3,840 x 2,160 content, and only part of that catalog is also offered in HDR. Choose based on content availability and hardware compatibility.

Color Reproduction Differences

Ultra HD displays with HDR typically excel in color reproduction compared to 4K displays without it, thanks to higher contrast and a wider color gamut; Ultra HD Premium certification is one indicator of this capability. This results in richer colors and better contrast, especially beneficial in color-critical fields like video editing. Resolution alone provides impressive clarity, but HDR adds visual depth, creating a more vivid viewing experience.

However, displays with good HDR support may come at a higher cost, and some content may not fully utilize HDR capabilities. Consider your content and device compatibility when deciding: 4K content without HDR is currently more plentiful, while HDR titles are where a well-equipped Ultra HD display shines.

Is Ultra HD Better than 4K?

Ultra HD and 4K both deliver high-resolution visuals, but Ultra HD offers some distinct advantages in certain areas. While both formats share similar resolutions, Ultra HD often includes enhanced color and contrast features, such as HDR, which can improve image quality and realism. However, choosing between Ultra HD and 4K ultimately depends on factors like content type, device compatibility, and budget.

Below, we’ll examine the pros and cons to help you decide which option better suits your needs.

Pros of Ultra HD over 4K

Ultra HD offers several advantages over standard 4K, particularly in image quality and color accuracy:

- Enhanced Color Reproduction: Ultra HD displays often support HDR, in some cases with Ultra HD Premium certification, offering a broader color range and improved contrast, which can create more lifelike and vivid visuals.

- Better Clarity on Larger Screens: With four times the pixels of Full HD, Ultra HD keeps images sharp on large displays where detail is more noticeable.

- Broader Compatibility with HDR Content: Many Ultra HD screens are optimized for HDR streaming content available on platforms like Netflix and Disney+, which enhances the viewing experience.

These enhancements make Ultra HD particularly suited for users who prioritize image quality for video editing, streaming HDR content, or viewing on large displays.

Cons of Ultra HD Compared to 4K

Despite its advantages, Ultra HD also has some limitations compared to standard 4K:

- Higher Cost: Ultra HD displays with HDR support tend to be more expensive than standard 4K models, which may impact budget-conscious buyers.

- Limited Content Availability: While 4K content is widely available, dedicated Ultra HD HDR content can be more limited, which may restrict viewing options.

- Increased Hardware Demands: Ultra HD’s advanced features like HDR may require more powerful processing, potentially leading to higher GPU and device requirements.

In summary, Ultra HD provides enhanced image quality, but buyers should consider the additional costs and hardware compatibility before choosing it over 4K.

Choosing Between Ultra HD and 4K: Key Factors

When deciding between Ultra HD and 4K, several critical factors can guide your choice. Device compatibility, content availability, and budget are the main considerations. Understanding these aspects can help you select the option that best aligns with your specific viewing preferences and technical requirements.

Compatibility with Devices

Ultra HD and 4K differ slightly in device compatibility because of the color enhancements that often accompany Ultra HD. While most newer TVs and streaming devices support 4K resolution, Ultra HD with HDR may require more recent models that support HDR formats like Dolby Vision or HDR10. Additionally, gaming consoles and GPUs vary in compatibility: some handle 4K smoothly but may struggle with HDR content, which carries more data per pixel and requires more processing power.
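To get a rough sense of the extra load HDR adds, the uncompressed video data rate can be estimated from resolution, frame rate, and bit depth. The Python sketch below is a back-of-the-envelope estimate only: it assumes three full-resolution color channels, 60 frames per second, 8 bits per channel for standard dynamic range, and 10 bits per channel for HDR10, and it ignores chroma subsampling, blanking intervals, and compression. The function name is illustrative.

```python
def uncompressed_gbps(width, height, fps, bits_per_channel, channels=3):
    """Raw video data rate in gigabits per second (1 Gbit = 1e9 bits)."""
    bits_per_frame = width * height * channels * bits_per_channel
    return bits_per_frame * fps / 1e9


# Ultra HD at 60 frames per second (assumed frame rate).
sdr = uncompressed_gbps(3840, 2160, 60, bits_per_channel=8)
hdr = uncompressed_gbps(3840, 2160, 60, bits_per_channel=10)

print(f"8-bit SDR:  {sdr:.2f} Gbit/s")
print(f"10-bit HDR: {hdr:.2f} Gbit/s")
print(f"HDR carries {hdr / sdr - 1:.0%} more raw data per frame")
```

Under these assumptions, 10-bit HDR carries 25% more raw data than 8-bit video at the same resolution and frame rate. Streamed video is heavily compressed, so real bitrates are far lower, but the ratio helps explain why HDR can need newer cables, ports, and decoders.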

Check the compatibility of your devices with Ultra HD and HDR features before making a purchase, as this will ensure you can fully utilize the advanced features without needing additional upgrades.

Content Availability

Content availability is another key factor in choosing between Ultra HD and 4K. While 4K content is widely available on platforms like Netflix, Amazon Prime Video, and YouTube, Ultra HD content with HDR may be more limited. Streaming services often categorize content separately for HDR formats, which means finding HDR-compatible Ultra HD content may require more specific subscriptions or platforms.

Consider which streaming services and content libraries offer the Ultra HD HDR content you want to access. If HDR content is limited on your preferred platforms, standard 4K may be sufficient.

Budget Considerations

Cost is often a deciding factor between Ultra HD and 4K. Ultra HD TVs and monitors, especially those with Ultra HD Premium certification or Dolby Vision support, tend to be more expensive than standard 4K models. If you are budget-conscious, you may find that a 4K display meets your needs without the additional cost of HDR support.

However, if image quality and future-proofing are priorities, investing in an Ultra HD display with HDR can provide long-term benefits, particularly as HDR content continues to grow in availability.

Conclusion: Which is Better, Ultra HD or 4K?

The choice between Ultra HD and 4K depends on your specific needs and viewing priorities. Both formats deliver high-resolution visuals, but they offer unique benefits depending on the context.

Ultra HD generally provides enhanced color reproduction and HDR support, making it ideal for those who value superior picture quality, especially on larger displays. It’s well-suited for home theater setups, high-end video editing, and HDR content streaming. However, Ultra HD displays typically come at a higher cost and may require compatible devices to fully utilize HDR features.

On the other hand, standard 4K offers great image quality at a more affordable price and is widely supported across devices and streaming platforms. If HDR or maximum color fidelity isn’t essential, 4K provides a strong viewing experience for general use, gaming, and content streaming without the need for additional hardware or subscription requirements.

In summary, Ultra HD is the better choice for users who prioritize image quality and have the budget for HDR capabilities, while 4K is ideal for those who want excellent resolution at a more accessible price point.

Q1. What is Ultra HD?

Ans: Ultra HD refers to a display resolution of 3840×2160 pixels, four times the pixel count of 1080p Full HD.

Q2. What is 4K?

Ans: In consumer products, 4K is a display resolution of 3840×2160 pixels, also providing four times the pixel count of 1080p Full HD. In cinema, 4K refers to 4096×2160 pixels.

Q3. Is Ultra HD the same as 4K?

Ans: Yes, Ultra HD and 4K commonly refer to the same resolution of 3840×2160 pixels. However, Ultra HD may sometimes indicate additional features, such as HDR or wider color gamut.

Q4. Is Ultra HD or 4K better?

Ans: Ultra HD and 4K offer the same resolution, so image quality is similar. However, Ultra HD displays with HDR or wider color gamut may provide better overall image quality than standard 4K.